
It's not very accurate, but it works for now. Just gotta live with it until something better comes along.

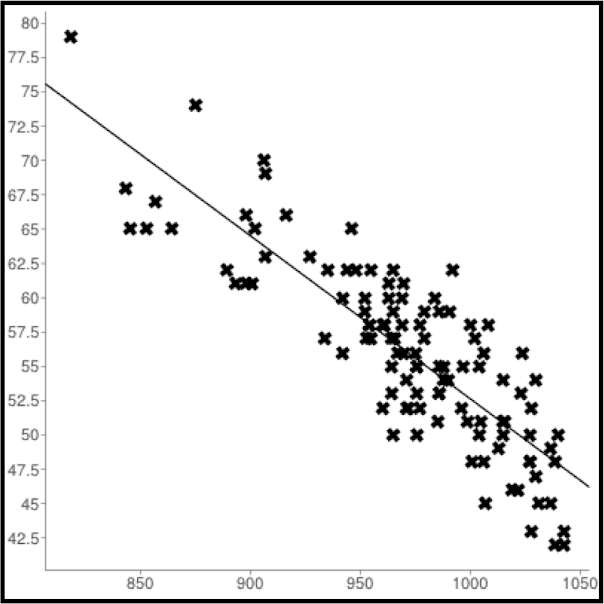

It is actually very accurate and stable.

The variations in SSA that I have observed are almost never more than +/- 1 throw (barring crazy weather or too few propagators to make things statistically valid).

It makes no sense to worry about accuracy of less than +/- 1.49 throws, since that gets rounded to 1 throw, and there is no way that I know of to throw a disc in less than 1 throw (or in a non-integer number of throws).

Edit: BTW, this is why I am asking to see the event stats. I want to see examples of variations greater than +/- 1.49 throws.
